Cost-benefit analysis and the general logic of evaluation

Evaluators sometimes talk about cost-benefit analysis (CBA) as if it’s something foreign to evaluation. The purpose of a CBA might sometimes overlap with that of evaluation, but to some, CBA might seem like a strange visitor from another planet, with entirely different DNA from our Earthly evaluation approaches. I would argue, to the contrary, that CBA is a form of evaluation. CBA conforms to the general logic of evaluation, through a sophisticated system of valuing and synthesis.

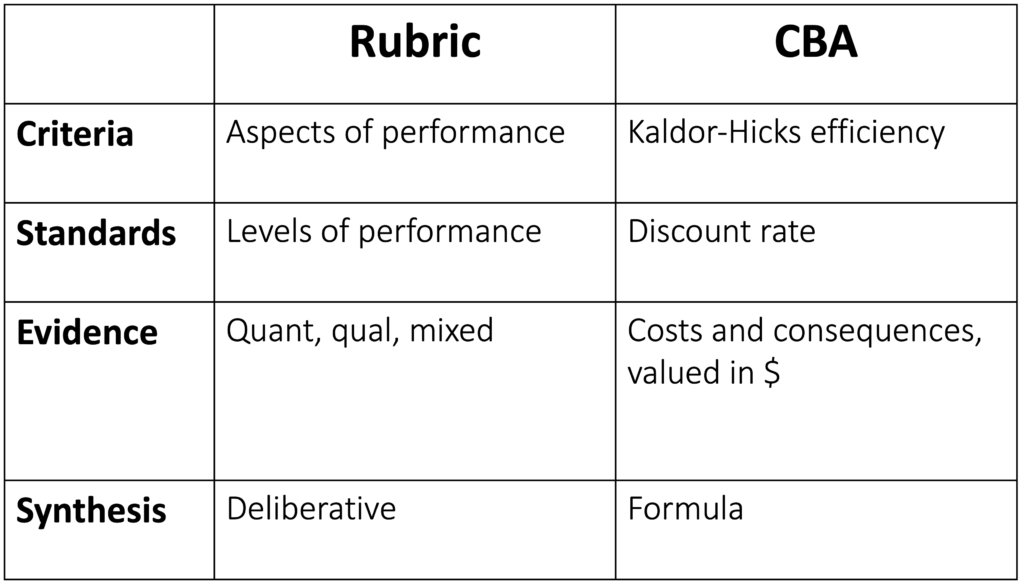

We are perhaps more used to thinking of the general logic in the way Michael Scriven, Deborah Fournier, Jane Davidson and others have described it. Indeed, when I’m not doing a CBA, this formulation of the general logic describes the way I generally practice evaluative reasoning: identify criteria (aspects of performance); set standards (levels of performance); gather and analyse evidence relevant to the criteria and standards; synthesise the criteria, standards and evidence to make an evaluative judgement (Fournier, 1995).

CBA has all these elements, but they’re not always visible, and they may look a little unfamiliar. CBA involves: identifying things of value, including resource use and impacts; quantifying them; valuing them (with money representing value); adjusting them for timing; and synthesising the evidence by aggregating the valuations to reach an overall determination of net value (King, 2017).

In rubric-based evaluation, we can have multiple criteria. For example, we might define and evaluate efficiency of delivery, cost-effectiveness of outcomes, and equity of processes and outcomes (King & OPM, 2018). Criteria are determined according to context (Schwandt, 2015), and with stakeholders (King et al., 2013).

In CBA, there is one criterion, which comes pre-packaged with the method: Kaldor-Hicks efficiency. When a policy or program makes society better off overall, by creating more value than it consumes, the criterion is satisfied (Adler & Posner, 2006).

In rubric-based evaluation, standards define levels of performance, such as ‘excellent’, ‘good’, ‘adequate’ and ‘poor’ (King & OPM, 2018).

In CBA, there is one standard. The threshold for a ‘good enough’ gain in Kaldor-Hicks efficiency is set by the discount rate, representing the value we might have obtained from the hypothetical universe of alternative things we could have done with the same resources (Drummond et al., 2005). The discount rate is adjustable, so you can move the hurdle up or down.
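To illustrate how the discount rate acts as an adjustable hurdle, here is a minimal sketch in Python. The figures (an upfront cost of 100 and a benefit of 150 arriving in year 10) and the function name `present_value` are hypothetical, chosen only to show the effect; the sketch assumes standard annual compounding.

```python
def present_value(amount, rate, year):
    """Value today of `amount` received `year` years from now."""
    return amount / (1 + rate) ** year


# Hypothetical program: pay 100 today, receive a benefit of 150 in year 10.
upfront_cost = 100.0
future_benefit = 150.0

for rate in (0.02, 0.04, 0.06):
    net = present_value(future_benefit, rate, 10) - upfront_cost
    verdict = "clears" if net > 0 else "fails"
    print(f"discount rate {rate:.0%}: net value {net:+.2f} ({verdict} the hurdle)")
```

The same program passes at the lower rates and fails at the higher one: raising the discount rate raises the bar that future benefits have to clear.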

In rubric-based evaluation, evidence can (and some say, should) come from multiple sources, qualitative and quantitative, with methods for gathering and analysing evidence being determined contextually (King, 2019).

In CBA, evidence of performance takes the form of monetary valuations of gains and losses in utility (aka ‘benefits’ and ‘costs’). These could be determined empirically (e.g. using surveys) or by reference to proxy values such as market prices (King, 2019).

In rubric-based evaluation, synthesis is usually best approached (in my experience at least) as a deliberative process. Multiple pieces of mixed-methods evidence need to be considered, guided by the criteria and standards. This process, often one of the more challenging steps in an evaluation, involves discussion and debate among evaluators and stakeholders (King, 2019).

In CBA, synthesis is one of the easiest steps. The formula for net present value (NPV) is pretty straightforward. Once you have all the data, you can calculate NPV in a few minutes using a spreadsheet.
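As a rough illustration of how little is involved in this step, the spreadsheet calculation can be sketched in a few lines of Python. The yearly figures and the 5% discount rate are made up for the example, and the function name `net_present_value` is my own; the sketch assumes year 0 is today and is left undiscounted.

```python
def net_present_value(benefits, costs, rate):
    """Sum of discounted (benefit - cost) per year, where index 0 is today."""
    if len(benefits) != len(costs):
        raise ValueError("benefits and costs must cover the same years")
    return sum(
        (b - c) / (1 + rate) ** t
        for t, (b, c) in enumerate(zip(benefits, costs))
    )


# Hypothetical four-year program, valued in money units per year.
costs = [100.0, 10.0, 10.0, 10.0]
benefits = [0.0, 50.0, 50.0, 50.0]

npv = net_present_value(benefits, costs, rate=0.05)
print(f"NPV at 5%: {npv:.2f}")
print("Criterion satisfied" if npv > 0 else "Criterion not satisfied")
```

The hard work in a CBA sits upstream, in deciding what goes into the `benefits` and `costs` lists and how each item is valued. The synthesis itself is a single sum.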

While both rubric-based evaluation and CBA implement the general logic of evaluation, they are quite different in terms of: what criteria are used and how they are defined; what standards are used and how they are set; what evidence is used to ‘measure’ performance and how it is gathered and analysed; how the criteria, standards and evidence are synthesised; and who is involved in each of these steps. In other words, they have different working logics (Fournier, 1995; King, 2019).

Analysing CBA through the lens of the general logic of evaluation reveals that CBA is a narrow form of evaluation that privileges one criterion, one standard, one form of evidence, and a tightly prescribed synthesis formula. These features of CBA enable it to give us important information, but not everything we need to know. It is a useful piece of an evaluation, but not the whole evaluation.

Importantly, you do not need to choose between CBA and rubrics. You can do both! For example, you can develop a rubric that includes Kaldor-Hicks efficiency and other criteria. You can use CBA to contribute important evidence in a mixed-methods evaluation. You can synthesise the insights from CBA, alongside a wider set of criteria, standards and evidence, to reach an evaluative judgement (King, 2019). This is the basis for the Value for Investment approach.

References:

Adler, M.D., & Posner, E.A. (2006). New Foundations of Cost-Benefit Analysis. Cambridge, Mass: Harvard University Press.

Davidson, E.J. (2005). Evaluation Methodology Basics: The nuts and bolts of sound evaluation. Thousand Oaks, CA: Sage.

Drummond, M. F., Sculpher, M. J., Torrance, G. W., O’Brien, B. J., & Stoddart, G. L. (2005). Methods for the economic evaluation of health care programmes. Oxford, England: Oxford University Press.

Fournier, D. M. (1995). Establishing evaluative conclusions: A distinction between general and working logic. In D. M. Fournier (Ed.), Reasoning in Evaluation: Inferential Links and Leaps. New Directions for Evaluation, (58), 15-32.

King, J., McKegg, K., Oakden, J., & Wehipeihana, N. (2013). Rubrics: A method for surfacing values and improving the credibility of evaluation. Journal of MultiDisciplinary Evaluation, 9(21), 11-20.

King, J. (2017). Using Economic Methods Evaluatively. American Journal of Evaluation, 38(1), 101-113.

King, J., & OPM VfM Working Group. (2018). OPM’s approach to assessing VfM: A guide. Oxford, England: Oxford Policy Management Ltd.

King, J. (2019). Evaluation and Value for Money: Development of an approach using explicit evaluative reasoning. (Doctoral dissertation). Melbourne, Australia: University of Melbourne.

Schwandt, T. (2015). Evaluation Foundations Revisited: Cultivating a Life of the Mind for Practice. Redwood City: Stanford University Press.